Null Pointer References: The Billion Dollar Mistake

In JavaScript, you have a function that takes 3 parameters, 2 of which are optional. If you call that function with only 2 arguments, is the 3rd parameter null or undefined?

Just a little pop quiz. The answer is undefined.

What is the difference between the two values?

- null is a value that must be explicitly assigned to represent “no value”; in languages with null references, it is a member of every reference type and has no behavior of its own

- undefined is the value of a variable (or parameter) that has been declared but not yet assigned
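The pop quiz and the two definitions can be checked directly. This is a minimal TypeScript sketch, assuming a JavaScript runtime such as Node.js; `formatArgs` and the variable names are hypothetical, chosen only for illustration.

```typescript
// Hypothetical function: `b` and `c` are optional parameters.
function formatArgs(a: number, b?: number, c?: number): string {
  return `a=${a}, b=${b}, c=${c}`;
}

// Called with two arguments, the missing third parameter is undefined, not null.
console.log(formatArgs(1, 2)); // prints a=1, b=2, c=undefined

// Declared but never assigned: the variable holds undefined.
let declaredOnly: string | undefined;
console.log(typeof declaredOnly); // prints undefined

// null only shows up when someone assigns it on purpose.
const assignedNull: string | null = null;
console.log(typeof assignedNull); // prints object (a long-standing JavaScript quirk)

// Loose equality treats the two as equal; strict equality does not.
const maybeNull: unknown = null;
const maybeUndefined: unknown = undefined;
console.log(maybeNull == maybeUndefined); // prints true
console.log(maybeNull === maybeUndefined); // prints false
```

The `typeof null === "object"` result is why null is often loosely described as an object, even though it is a primitive value.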

While undefined simply marks a value that was never assigned, null is the misguided invention of British computer scientist Tony Hoare (most famous for his Quicksort algorithm), who introduced the null reference in 1965 and later called it his “billion dollar mistake”.

The idea traces back to 1960, when Tony took a job at Elliott Brothers (London) Ltd. and was tasked with implementing a new high-level programming language. At the time, most software was still written in machine code, and moving to a high-level language meant that programmers could no longer debug by stepping through the machine code.

To shield customers from implementation details, errors had to be reported in terms of the high-level program instead of as a hexadecimal core dump. To generate such an error message, the compiled program checked every array subscript to make sure it was within the bounds of the array. These checks significantly slow a program down and eat into the memory available to it; what customers didn’t know was that they could trade that safety for speed.
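The subscript check can be sketched as follows. This is a minimal TypeScript illustration under stated assumptions, not how Hoare’s compiler was implemented: `checkedGet` is a hypothetical helper that compares each index against the array’s bounds and raises an error phrased in terms of the program’s own data.

```typescript
// Hypothetical helper (not from any library): check a subscript against the
// array's bounds before reading, and raise a readable error if it is outside them.
function checkedGet<T>(arr: readonly T[], index: number): T {
  // Every access pays for these comparisons: the run-time cost of the check.
  if (!Number.isInteger(index) || index < 0 || index >= arr.length) {
    throw new RangeError(
      `subscript ${index} is out of bounds for an array of length ${arr.length}`,
    );
  }
  return arr[index] as T;
}

const scores: number[] = [90, 75, 60];
console.log(checkedGet(scores, 1)); // prints 75

try {
  checkedGet(scores, 3);
} catch (e) {
  // A message about the program's data rather than a memory dump.
  console.log((e as Error).message); // prints subscript 3 is out of bounds for an array of length 3
}

// Plain JavaScript indexing never reads outside the array, but it does not
// report an error either: an out-of-range read simply evaluates to undefined.
console.log(scores[3]); // prints undefined
```

A compiler switch that skipped the comparisons inside `checkedGet` would be the safety-for-speed trade described above.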

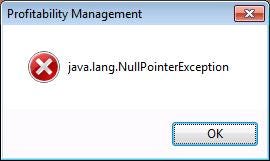

Years later, the creators of the Java language made the same decision to bounds-check arrays. Object-oriented programming introduced objects, which are accessed through pointers (references).

In 1965, Hoare was designing the type system for references in ALGOL W, with the goal that the compiler would check every use of a reference automatically. He added the null reference anyway, by his own account “simply because it was so easy to implement.” During that time, Fortran programmers preferred to risk disaster rather than check subscripts (verifying that every array index stays within bounds so a program doesn’t crash).

Hoare’s friend, Edsger Dijkstra, pointed out that the null reference could be a bad idea. Comparing a null reference to a promiscuous adulterer, he noted that with null assigned to every bachelor represented in an object structure, they “will seem to be married polyamorously to the same person Null”.

Regardless, Hoare chose fast-running code over safety checks. Introducing null allowed programs to compile cleanly and then crash at run time, to leak memory, and, worse, to expose security holes. Even more damaging was C’s `gets` function, which let early viruses break in by overflowing a buffer and overwriting a function’s return address on the stack, essentially teaching the world how to write malware.
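Dijkstra’s bachelor example also shows how null lets code compile and then crash at run time. One later answer is TypeScript’s `strictNullChecks` compiler option, which makes null part of a type only when it is written out, so the compiler forces the null case to be handled. A minimal sketch, assuming that option is enabled; the `Person` type and `spouseName` function are hypothetical.

```typescript
// Hypothetical record type: a person may or may not have a spouse,
// and the type says so explicitly with `| null`.
interface Person {
  name: string;
  spouse: Person | null;
}

function spouseName(p: Person): string {
  // With strictNullChecks on, returning `p.spouse.name` without the check
  // below is rejected at compile time. With it off, the same line compiles
  // and throws a TypeError at run time for every bachelor.
  if (p.spouse === null) {
    return "(unmarried)";
  }
  return p.spouse.name; // narrowed: p.spouse is a Person here
}

const bachelor: Person = { name: "Alex", spouse: null };
const alice: Person = { name: "Alice", spouse: null };
const bob: Person = { name: "Bob", spouse: alice };
alice.spouse = bob;

console.log(spouseName(bachelor)); // prints (unmarried)
console.log(spouseName(bob)); // prints Alice
```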

The Code Red worm, which infected companies worldwide, brought down networks around the globe. The resulting disruption to ordinary banking and other business was estimated to cost the world economy 4 billion dollars. By comparison, according to Tony Hoare, the Y2K bug, a class of bugs related to the storage and formatting of calendar data, was estimated to cost a little less than 4 billion dollars.

The Takeaway

Some lessons we can take away from Tony Hoare’s experience, as outlined in his 2009 QCon presentation:

- Null references have historically been a bad idea

- Early compilers provided opt-out switches for run-time checks, at the expense of correctness

- Programming language designers should be responsible for the errors in programs written in that language

- Customer requests and markets may not ask for what’s good for them; they may need regulation to build the market

- If the billion dollar mistake was the null pointer, the C `gets` function is a multi-billion dollar mistake that created the opportunity for malware and viruses to thrive

If you enjoy my work, follow me on Patreon!

Get the latest updates on my work on Patreon — https://www.patreon.com/AmandaHinchman